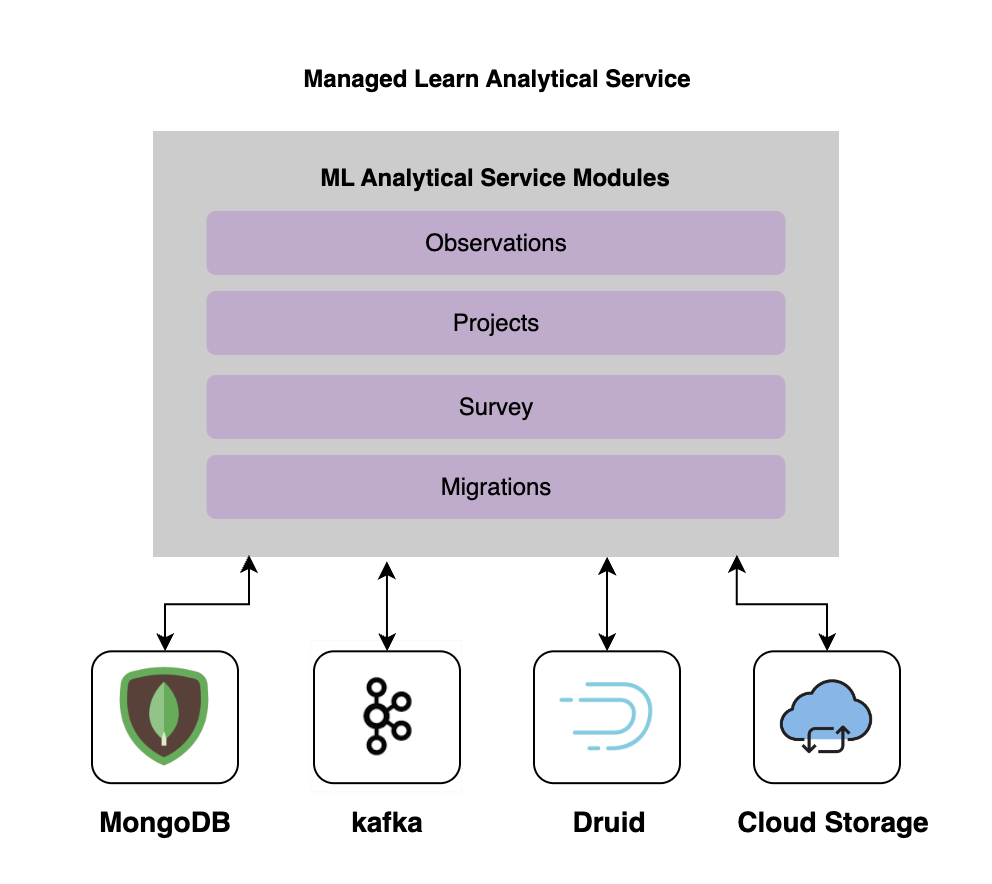

Component Diagram

GitHub Repository

The ML-Analytics service is built on Kafka, MongoDB, Druid, and cloud storage. It collects data from MongoDB or Kafka, transforms it, and then writes the refined data to either cloud storage or Kafka. The data is then made available in Druid for further analysis.

The Analytics Service is composed of three key modules:

Observations

This module handles both batch and real-time data ingestion.

Batch: Operating on a predefined schedule, it reads Observation-related data from MongoDB. The data is then transformed into a flattened structure before being loaded into Druid for further analysis.
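
The flattening step described above can be sketched as follows. This is a minimal illustration, not the service's actual code: the `flatten` helper, the `_` key separator, and the fields of the sample `observation` document are all assumptions made for the example.

```python
from typing import Any


def flatten(doc: dict[str, Any], parent_key: str = "", sep: str = "_") -> dict[str, Any]:
    """Collapse nested dicts into one level, joining key names with `sep`."""
    flat: dict[str, Any] = {}
    for key, value in doc.items():
        name = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            # Recurse into sub-documents and merge their flattened keys.
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


# Hypothetical observation document, shaped like a record read from MongoDB.
observation = {
    "_id": "obs-1",
    "status": "completed",
    "entity": {"type": "school", "name": "Example School"},
}

row = flatten(observation)
print(row)
```

Each flattened row has only top-level columns, which suits a columnar store such as Druid. Array fields are left untouched in this sketch; a real job would likely need a rule for them, such as emitting one row per array element.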

Real-time: The system subscribes to a designated Kafka topic that holds observations. Upon receiving new observations, the system processes them and subsequently publishes the processed data to another Kafka topic. This processed data is then consumed by Druid for real-time analysis.
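
The consume, process, and publish loop described above might look roughly like this. To keep the sketch self-contained, a plain list stands in for the source Kafka topic and a callback stands in for the producer; `process_observation`, `run_stream`, and the event fields are hypothetical names chosen for illustration.

```python
import json
from typing import Callable, Iterable


def process_observation(raw: bytes) -> bytes:
    """Decode one raw event, keep the fields of interest, and re-encode it."""
    event = json.loads(raw)
    processed = {
        "observation_id": event["_id"],  # assumed field name
        "status": event.get("status", "unknown"),
    }
    return json.dumps(processed).encode("utf-8")


def run_stream(messages: Iterable[bytes], publish: Callable[[bytes], None]) -> int:
    """Process each incoming message and hand the result to `publish`."""
    count = 0
    for raw in messages:
        publish(process_observation(raw))
        count += 1
    return count


# Stand-ins for the source topic and the destination topic (assumptions).
incoming = [json.dumps({"_id": "obs-1", "status": "completed"}).encode("utf-8")]
outgoing: list[bytes] = []

handled = run_stream(incoming, outgoing.append)
print(handled, outgoing)
```

Keeping the transformation in a pure function separates it from the transport, so the same logic can be tested without a running Kafka broker and later wired to a real consumer and producer.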

Projects

Batch: Operating on a predefined schedule, it reads project-related data from MongoDB. The data is then transformed into a flattened structure before being loaded into Druid for further analysis.

Survey

This module handles both batch and real-time data ingestion.

Batch: Operating on a predefined schedule, it reads survey-related data from MongoDB. The data is then transformed into a flattened structure before being loaded into Druid for further analysis.

Real-time: The system subscribes to a designated Kafka topic that holds surveys. Upon receiving new surveys, the system processes them and subsequently publishes the processed data to another Kafka topic. This processed data is then consumed by Druid for real-time analysis.

Migrations

Migration scripts are used to create, update, or retire charts and reports in the respective environments.

Video on Analytics Service